(Web)-Developers are used to stacks, most prominent among them probably the LAMP Stack or the more current MEAN Stack. Of course there are plenty around, but on the other hand, I have not heard too many data scientists talking about so much about data stacks – may it because we think, that in a lot of cases all you need is some python a CSV, pandas, and scikit-learn to do the job.

But when we sat down recently with our team, I realized that we indeed use a myriad of different tools, frameworks, and SaaS solutions. I thought it would be useful to organize them in a meaningful data stack. I have not only included the tools we are using, but I sat down and started researching. It turned out into an extensive list aka. the data stack PDF. This poster will:

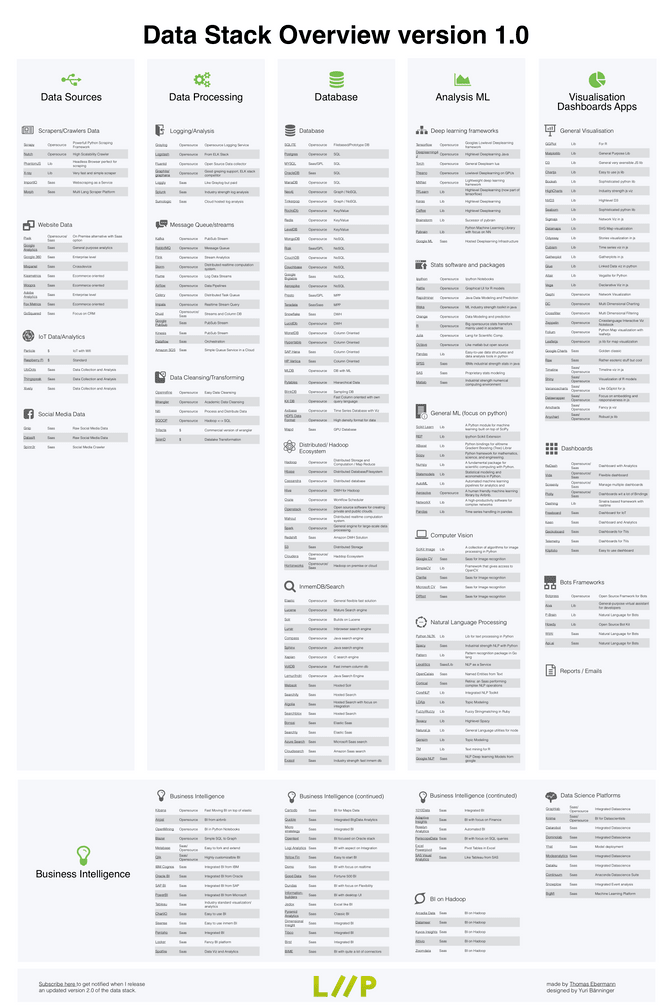

- provide an overview of solutions available in the 5 layers (Sources, Processing, Storage, Analysis, Visualization)

- offer you a way to discover new tools and

- offer orientation in a very densely populated area

So without further ado, here is my data stack overview Click to open PDF. Feel free to share it with your friends too.

Liip data stack version 1.0

Click here to get notified by email when I release version 2.0 of the data stack.

Let me lay out some of the questions that guided me in researching each area and throw in my 5 cents while researching each one of them:

- Data Sources: Where does our data usually come from? For us, it's websites with sophisticated event tracking. But for some projects the data has to be scraped, comes from social media outlets or comes from IoT devices.

- Data Processing: How can we initially clean or transform the data? How and where can we store the logs that those events create? Also from where do we also take additional valuable data?

- Database: What options are out there to store the data? How can we search through it? How can we access big data sources efficiently?

- Analysis: Which stats packages are available to analyze the data? Which frameworks are out there to do machine learning, deep learning, computer vision, natural language processing?

- Visualization, Dashboards, and Applications: What happens with the results? What options do we have to visually communicate them? How do we turn those visualizations into dashboards or whole applications? Which additional ways of communicating with the user beside reports/emails are out there?

- Business Intelligence:

What solutions are out there that try to integrate the data sourcing, data storage, analysis and visualization in one package? What solutions BI solutions are out there for big data? Are there platforms/solutions that offer more of a flexible data-scientist approach?

My observations when compiling the list:

Data Sources

- For scrapers, there are actually quite a lot of open source projects out there that work really well, probably because those are used mostly by developers.

- While there is quite a few software as a service solutions with slightly different focus, capturing website data in most cases is done via google analytics, although Piwik offers a nice on-premise alternative.

- We have been experimenting quite a bit with IoT devices and analytics, and it turns out that there seems to be quite a few integrated data-collection and analysis software as a service solutions out there, although you are always able to to use your own (see later) solutions.

- For social media data, the data comes either from the platforms themselves via an API (which is probably the default for most projects), but there are some convenient data providers out there that allow you to ingest social media data across all platforms.

Data Processing

- While there are excellent open source logging services like graylog or logstash, it can sometimes save a lot of time to use those pricey saas solutions because people have solved all the quirks and tiny problems that open source solutions sometimes have.

- While there are some quite old and mature open source solutions (e.g. RabbitMQ or Kafka) in the message queues or streams category, it turned out that there a lot of new open source stream analytics solutions (Impala, Flink or Flume) in the market and almost all of the big four (Microsoft, Google, Facebook, Amazon) offer their own approaches.

- The data cleansing or transformation category is quite a mixed bag. While on one hand there are a number of very mature industry standard solutions (e.g. Talend), there are also alternatives for end users that allow them simply to clean their data without any programming knowledge (e.g. Trifacta or Open Refine)

Databases

- Databases: If you haven't followed the development in the databases area closely like me, you might think that solutions will fall either in the SQL (e.g. MySQL) or the NoSQL (e.g. MongoDB) bucket. But apparently a LOT has been going on here, probably among the most notable are the graph based databases (e.g. Neo4J) and the Column Oriented databases (e.g. Hana or Monet DB) that offer a much better performance for BI tasks. There are also some recent experimental highly promising solutions like databases in the GPU (e.g. Mapd) or ones that only sample (e.g. BlinkDB) the whole dataset.

- The distributed big data ecosystem: It is mostly populated by mature projects from the Apache foundation that integrate quite well in the Hadoop ecosystem. Worth mentioning are of course the distributed machine learning solutions for large scale processing like Spark or Mahout, that are really handy. There are also a lot of mature options like Cloudera or Hortonworks that offer out of the box integrations.

- In Memory Databases or Search: Of course you the first thing that comes to mind is elastic(search) that proved over the years to be a reliable solution. Overall the area is populated by quite a lot of stable open source projects (e.g. Lucene or Solr) while on the other hand, you can now directly tap into search as a service (e.g. AzureSearch or Cloudsearch) from the major vendors. The most interesting projects I will try follow are the fastest in-memory database Exasol and its “competitor” VoltDB.

Analysis / ML Frameworks

- Deep Learning Frameworks: Obviously, on one hand, you will find the kind of low-level frameworks like Tensorflow, Torch, and Theano in there. But on the other hand, there are also high-level alternatives that build up upon those like TFlearn (that has been integrated into Tensorflow now) or Keras, which allow you to make progress faster with less coding but also without being able to control all the details. Finally, there are also alternatives to hosting these solutions yourself, in services like the Google ML platform.

- Statistic software packages: While maybe a long time ago you could only choose from commercial solutions like SPSS, Matlab or SAS, nowadays there is really a myriad of open source solutions out there. Whole ecosystems have developed around those languages (python, R, Julia etc.). But also even without programming, you can analyze data quite efficiently with tools like Rapidminer, Orange or Rattle. For me, nothing beats the combination of pandas and an ipython notebook.

- General ML libraries: I put the focus here on mainly the python ecosystem, although the other ones are probably as diverse as this one. With scipy, numpy or scikit-learn we've got a one-stop shop for all your ML needs, but nowadays there are also libraries that take care of the hyperparameter optimization (e.g. REP) or model selection (AutoML). So again here you can also choose your level of immersion yourself.

- Computer vision: While you will find a lot of open source libraries that rely on OpenCV somehow a myriad of awesome SaaS solutions (e.g. Google CV, Microsoft CV) from big vendors have popped up in the last years. These will probably beat everything you might hastily build over the weekend but are going to cost you a bit. The deep learning movement has really made computer vision, object detection etc.. really accessible for anyone.

- Natural language processing: Here I noticed a similar movement. We used NLP libraries to process social media data (e.g. sentimentanalysis) and found that there are really great open source projects or libraries out there. While there are various options for text processing (e.g. natural for node.js, or nltk for python or coreNLP from Stanford), it is deep learning and the SaaS products built upon it that have really made natural language processing available for anyone. I am very impressed with the results of these tools, although I doubt that we will come anywhere close in the next years to computers really understanding us. After all its the holy grail of AI.

Dashboards / Visualization

- Visualization: I was really surprised how many js libraries are out there, that allow you to do the fanciest data visualizations in the browser. I mean its great to have those solid libraries like ggplot or matplotlib, or the fancy ones like bokeh or seaborn but if you want to communicate your results to the user in a periodic way, you will need to go through the mobile / browser. I guess we have to thank the strong D3 community for the great developments in this area, but also there are a lot of awesome: SaaS and open source solutions that go way beyond just visualization like Shiny for R or Redash that feel more like a business intelligence solution.

- Dashboards: I am personally a big fan of dashing.io because it is simply free and it's in ruby, but plotly has really surprised me as a very useful tool to just create a dashboard without a hassle. There is a myriad of SaaS solutions out there that I stumbled upon when researching this field, which I will have to try. I am not sure if they will all hold up to the shiny expectations, that those websites sell.

- Bot Frameworks: Although I think of bots or agents more of a way of interacting with a user, I have put them into the visualization area because they didn't fit in anywhere else. P-Brain.ai and Wit.ai or botpress turn out to be a really fast way to get started here when you just want to build a (slack)-bot. I am however not sure if chatbots will be able to deliver the right results, given the hype with those.

Business Intelligence

- Business Intelligence: I thought I knew more or less the alternatives that are out there. But having researched a bit, boy was I surprised to find how much is actually out there. Basically, every vendor of the big four has a very mature solution out there. Yet I found it really hard to distinguish between the different SaaS solutions out there, maybe it's because of the marketing talk, or maybe because they just all do the same thing. It's interesting to compare how potentially business intelligence solutions are offering the capabilities of the before mentioned data stack, but given the variety of different solutions in each layer, I think more and more people will be tempted to pick and chose instead of buying the expensive all in one solution. There are however open source alternatives, of which some feel quite mature (e.g. Kibana or Metabase) while others are quite small but really useful (e.g. Blazer). Also don't judge me too hard, if I put Tableau in there, some may say it's just a visualization tool, others perceive it as a BI solution – I think the boundaries are really blurry in this terrain.

- BI on Hadoop: I had to introduce this category because I discovered that a lot of solutions are particularly tailored to working on the Hadoop stack. It's great to see that there are options out there and I am eager to explore this terrain in the future.

- Data Science Platforms: What I noticed too is that somehow that data scientists are becoming a target group of integrated business intelligence solutions or data science platforms. I had some experience with BigML and Snowplow before, but it turns out that there is a lot of different platforms popping up, that might make your life much easier. For example, when it comes to deploying your models (like Yhat) or having a totally automated way of learning models (e.g. Datarobot). I am really excited to see what things will pop up here in the future.

What I realized that this task of creating an overview of the different tools and solutions in the data-centric area will never be complete. Even when writing this blog post I had to add 14 more tools to the list. And I am aware of the fact that I might have missed some major tools out there, simply because it's hard to be unbiased when researching.

That is why I created a little email list that you can sign up to, and I will send you the updated version of this stack somewhere this year. So sign up to stay up to date (I promise I will not spam you) and write me a comment to let me know of new solutions or to let me know in the comments how you would have segmented this field or what your favorite tools are.

Click here to get notified by email when I release version 2.0 of the data stack.