Five years after the first edition, another “72 Stunden” (72 hours) event organized by SAJV took place this weekend. As five years ago, we were responsible for the technical part. We were quite happy with how it worked back then (read this and this), so we went with “never change a running system” and used Flux CMS for the whole thing. But instead of hosting it all ourselves, we decided to put everything on Amazon Web Services to take advantage of all the things AWS offers.

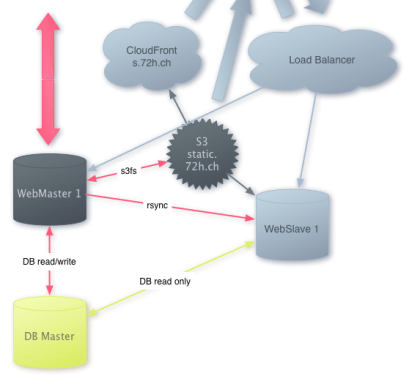

We separated the “public” part from the “admin” part, so that even if the site got hit by many visitors, the admins could still work without hassle. The plan was one EC2 server for the WebMaster (the admin server), one EC2 server for the MySQL master, and one or more servers for serving the pages, backed by one or more MySQL slaves. We put DB and web on different machines so that we could scale them independently.
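In code, the split described above boils down to sending every write to the MySQL master and spreading reads over the slaves. Here is a minimal sketch in Python. It assumes hypothetical host names (`db-master`, `db-slave1`, ...). It also assumes the admin server always talks to the master so admins see their own edits without waiting for replication, and it treats anything that isn't a plain `SELECT` as a write. Flux CMS's real database layer isn't described in the post and will look different.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Iterator, List


@dataclass
class DbRouter:
    """Hypothetical read/write splitter for a MySQL master/slave setup."""
    master: str
    slaves: List[str]
    _slave_iter: Iterator[str] = field(init=False, repr=False)

    def __post_init__(self) -> None:
        # Rotate reads over the slaves; fall back to the master if there are none.
        self._slave_iter = cycle(self.slaves or [self.master])

    def host_for(self, sql: str, admin: bool = False) -> str:
        # Assumption: admin requests always go to the master (read-your-writes).
        if admin:
            return self.master
        # Only plain SELECTs may go to a slave; everything else counts as a write.
        if sql.lstrip().upper().startswith("SELECT"):
            return next(self._slave_iter)
        return self.master


if __name__ == "__main__":
    router = DbRouter(master="db-master", slaves=["db-slave1", "db-slave2"])
    print(router.host_for("SELECT title FROM pages"))        # db-slave1
    print(router.host_for("UPDATE pages SET title = 'x'"))   # db-master
    print(router.host_for("SELECT title FROM pages"))        # db-slave2
```

Adding a DB slave then only means appending its host to `slaves`. The master stays the single target for writes, which is exactly why it remains the one part that doesn't scale out.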

As it turned out, we didn't need that many instances, so we ran with the setup shown in the diagram. But it was reassuring to know that if the load went up, we could just start another Web-Slave or DB-Slave with a mouse click (actually, I was even able to control it from my iPhone while sitting in the sun somewhere :))

For serving static files, we used S3 and CloudFront, Amazon's Content Delivery Network (CDN). The truly static files (like logos) we delivered from CloudFront; the files that changed from time to time (like CSS and JS) we served from S3. We didn't have proper version naming for those files in place, so we couldn't use CloudFront with its long expiry times for them, but S3 on its own was more than enough. Offloading these requests also took some load off our servers.
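The missing piece for putting CSS and JS on CloudFront is versioned file names. If the name contains a hash of the content, a changed file gets a new URL, so a long expiry can never serve a stale copy. The post didn't use this technique. Below is a minimal Python sketch of it. Both base URLs are hypothetical, and it assumes the files are uploaded under their versioned names and that templates build links through something like `asset_url`.

```python
import hashlib
from pathlib import PurePosixPath
from typing import Optional

# Hypothetical endpoints; the post doesn't name the bucket or the distribution.
CLOUDFRONT_BASE = "https://dexample.cloudfront.net"
S3_BASE = "https://example-bucket.s3.amazonaws.com"


def versioned_name(path: str, content: bytes, length: int = 8) -> str:
    """Embed a short content hash in the file name: css/site.css -> css/site.<hash>.css."""
    digest = hashlib.sha1(content).hexdigest()[:length]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


def asset_url(path: str, content: Optional[bytes] = None) -> str:
    """Versioned assets can go through the CDN with a long expiry; others stay on S3."""
    if content is None:
        # No version in the name: a long CDN expiry could serve stale files.
        return f"{S3_BASE}/{path}"
    # The name changes whenever the content does, so caching for a long time is safe.
    return f"{CLOUDFRONT_BASE}/{versioned_name(path, content)}"
```

The hash is computed from the bytes, not a timestamp, so re-uploading an unchanged file keeps its URL and the CDN cache stays warm.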

For putting the files onto S3, we used s3fs. It worked quite well, and we didn't have to change any CMS code; we just had to point it to the right directories. The only issue was that s3fs makes several requests to S3 on each fstat, so we had to tune some things and move others back to EC2. s3fs does cache the actual file contents (if you tell it to), but every time you access a file it checks whether it changed on the S3 side. That's OK and usually what you expect, but in our case it produced 10 million requests to S3 by Saturday (our gallery is fstat-intensive :)). We then moved the gallery pictures off S3 again, and everything stayed within normal bounds. That cost us 10 pretty unnecessary dollars :)
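To get a feel for how an fstat-heavy gallery piles up requests, here is a toy Python model. It compares revalidating on every access with trusting cached metadata for a while. This is not s3fs's implementation, and it counts only one request per stat, while s3fs makes several. The file counts and access rates are made-up numbers.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class MetadataCache:
    """Toy model of a file system that asks S3 for metadata when a file is stat()ed.

    ttl=0 revalidates on every access; ttl>0 trusts a cached answer for that
    many seconds. Illustrative only; this is not how s3fs is implemented.
    """
    ttl: float
    requests: int = 0
    _fetched_at: Dict[str, float] = field(default_factory=dict)

    def stat(self, key: str, now: float) -> None:
        last = self._fetched_at.get(key)
        if last is None or now - last >= self.ttl:
            self.requests += 1           # count one metadata request to S3
            self._fetched_at[key] = now


def simulate(ttl: float, files: int, stats_per_second: int, seconds: int) -> int:
    """Count S3 requests when a gallery stats its files at a steady rate."""
    cache = MetadataCache(ttl)
    for t in range(seconds):
        for i in range(stats_per_second):
            cache.stat(f"gallery/{i % files}.jpg", float(t))
    return cache.requests


if __name__ == "__main__":
    print(simulate(ttl=0, files=100, stats_per_second=100, seconds=120))   # 12000
    print(simulate(ttl=60, files=100, stats_per_second=100, seconds=120))  # 200
```

With revalidation on every access, the request count grows with traffic. With even a short metadata TTL, it grows only with the number of files and the elapsed time.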

We didn't put the templates, code and some other files needed by the CMS onto S3, but kept them on the “normal” filesystem. We then synced changes to the WebSlaves the traditional way, via rsync every 5 minutes.
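A sync job like that can be sketched as a small Python script run from the admin server. It assumes SSH access to each WebSlave. The source directory and host names are hypothetical. The flags are standard rsync options: `-a` (archive mode), `-z` (compression) and `--delete` (remove files on the slave that no longer exist on the source).

```python
import subprocess
from typing import List, Sequence

# Hypothetical values; the post only says "rsync every 5 minutes to the WebSlaves".
SOURCE_DIR = "/var/www/fluxcms/"     # trailing slash: sync the directory's contents
WEB_SLAVES = ("webslave1", "webslave2")


def rsync_command(host: str, src: str = SOURCE_DIR, dest: str = SOURCE_DIR) -> List[str]:
    # -a preserves permissions, timestamps and symlinks; -z compresses in transit;
    # --delete removes files on the slave that were deleted on the admin server.
    return ["rsync", "-az", "--delete", src, f"{host}:{dest}"]


def sync_all(hosts: Sequence[str] = WEB_SLAVES, dry_run: bool = False) -> List[List[str]]:
    """Build (and unless dry_run, execute) one rsync per WebSlave."""
    commands = [rsync_command(h) for h in hosts]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError if a transfer fails.
            subprocess.run(cmd, check=True)
    return commands
```

To get the 5-minute cadence, a script that calls `sync_all()` would be scheduled from cron with `*/5 * * * *`.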

We also used Elastic IP Addresses and Elastic Load Balancing. Elastic Load Balancing nowadays even supports sticky sessions, but we didn't need that (the people who needed sessions went to admin.72h.ch). All in all a great feature: it lets you add new servers easily and take down the ones you no longer need.

That admin.72h.ch part was also the only thing we couldn't scale at the click of a button, mainly because of the DB master. In every traditional MySQL replication setup, the DB master is the potential bottleneck and can only handle a certain number of DB writes. But since we knew there was a finite and relatively small number of admins, we could live with that. And had we miscalculated, we could still have taken the public traffic off those two servers (but, you guessed it, that wasn't needed).

This whole setup for approximately 4 days cost us a little more than 100$. Not bad for a fully scalable setup that could have served many more visitors than we had. The actual costs were:

- EC2: 64$ (mainly for 3 High-CPU Medium (c1.medium) instances running over the weekend)
- CloudFront: 4.60$
- EC2 EBS: 1.50$
- Elastic Load Balancing: 2.70$
- CloudWatch: 4.50$
- S3: 14$ (as mentioned, 10$ of that could have been saved…)
- Data Traffic: 2.30$

The rest went to taxes and small items here and there.
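A quick tally of the line items shows how the bill breaks down. The sketch uses Python's `decimal` so cents add up exactly. The only inputs are the figures listed above; the gap to "a little more than 100$" is the taxes and small items.

```python
from decimal import Decimal

# Line items from the list above, in US dollars.
costs = {
    "EC2": Decimal("64.00"),
    "CloudFront": Decimal("4.60"),
    "EC2 EBS": Decimal("1.50"),
    "Elastic Load Balancing": Decimal("2.70"),
    "CloudWatch": Decimal("4.50"),
    "S3": Decimal("14.00"),
    "Data Traffic": Decimal("2.30"),
}

itemized = sum(costs.values(), Decimal("0"))
avoidable_s3 = Decimal("10.00")  # the gallery fstat requests to S3

print(f"itemized: {itemized}$")                                     # itemized: 93.60$
print(f"without the gallery mistake: {itemized - avoidable_s3}$")  # without the gallery mistake: 83.60$
```

EC2 alone accounts for roughly two thirds of the itemized total. The avoidable S3 requests were the second-largest single cost.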

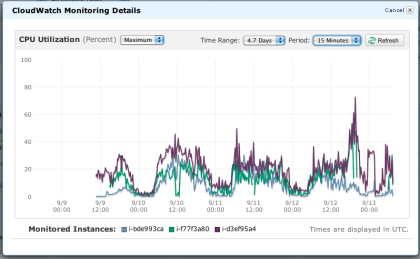

CloudWatch let us follow the trend in CPU consumption; see the graph (the peak on Sunday evening happened because I turned off WebSlave1, which I should have waited to do until later...)

Together with CloudWatch, I also played a little with Auto Scaling, which automatically adds or removes servers when the load is high (or low). This was the only feature that couldn't be configured via Amazon's web interface, so we didn't really put it into production, but I got it running with the CLI tools.
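The decision that Auto Scaling automates can be illustrated with a simple threshold rule on average CPU. This is not Amazon's implementation. Real Auto Scaling is driven by CloudWatch alarms and scaling policies. The thresholds and the min/max instance counts below are hypothetical values chosen for the sketch.

```python
from statistics import mean
from typing import Sequence


def desired_instances(cpu_samples: Sequence[float], current: int,
                      scale_up_at: float = 70.0, scale_down_at: float = 25.0,
                      minimum: int = 1, maximum: int = 4) -> int:
    """Return the new instance count given recent average-CPU samples (percent).

    A toy threshold policy: add one server when the average is high, remove one
    when it is low, and never leave the [minimum, maximum] range.
    """
    if not cpu_samples:
        return current                   # no data: change nothing
    avg = mean(cpu_samples)
    if avg > scale_up_at:
        target = current + 1
    elif avg < scale_down_at:
        target = current - 1
    else:
        target = current
    # Clamp so we never drop below the minimum or exceed the maximum.
    return max(minimum, min(maximum, target))
```

Keeping a gap between the two thresholds gives a dead band where nothing changes. This avoids constantly starting and stopping instances when the load hovers around a single limit.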

All in all, a very good experience, even if we didn't need more servers than planned. We obviously just planned very well :) But a single server in our little data center certainly wouldn't have delivered all that so smoothly. We could enjoy the sunny weekend knowing that if we had to scale, we could do it with a few mouse clicks (or, as mentioned, from the iPhone). And at 100$ it's great bang for the buck, especially for events where the full capacity is only needed for a few days every few years.

BTW, we could have used Amazon RDS here instead of our own MySQL servers, but as we had zero experience with it, we preferred not to use it. The DB was a central part, after all.