In this post, I’ll explain how I used both tools to build a chatbot that can answer questions about the Swiss Parliament, and a workflow for transcribing meeting recordings.

Understanding AI Workflows and Agents

Workflows and agents are theoretically not the same, but the terms are often used interchangeably. A lot has already been written about that elsewhere, so I’ll keep it short.

What Is an AI Workflow?

An AI workflow is a set of steps that happen in order to solve a task. Each step can use a model or a tool to handle data. These workflows usually follow the same path every time. Sometimes they can also go back in the flow if the result is not satisfactory yet, but the structure remains predictable.

What Is an AI Agent?

An AI agent is more flexible. It takes a goal and figures out how to get there. It can choose which tools to use – like web search, database lookups, API calls – and can adapt its plan depending on what it finds. It's more like: here's the task, here are the tools, now figure it out.
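To make the "here are the tools, now figure it out" idea concrete, here is a minimal sketch of an agent loop in plain Python. The `choose_action` function stands in for the LLM and is a hard-coded stub. The tool names and the loop structure are assumptions for illustration, not the API of any framework.

```python
from typing import Callable


# Hypothetical tools; a real agent would call a search engine, a database or an API.
def web_search(query: str) -> str:
    return f"search results for '{query}'"


def db_lookup(query: str) -> str:
    return f"database rows matching '{query}'"


TOOLS: dict[str, Callable[[str], str]] = {
    "web_search": web_search,
    "db_lookup": db_lookup,
}


def choose_action(goal: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM: pick the next tool, or 'finish' with an answer."""
    if not observations:
        return "web_search", goal
    if len(observations) == 1:
        return "db_lookup", goal
    return "finish", f"answer based on {len(observations)} observations"


def run_agent(goal: str, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, argument = choose_action(goal, observations)
        if action == "finish":
            return argument
        # The agent, not the programmer, decided which tool runs here.
        observations.append(TOOLS[action](argument))
    return "gave up: step limit reached"
```

The step limit is the important design detail: because the path is not fixed in advance, something has to stop an agent that never decides it is done.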

These two approaches can be combined, of course.

Introducing LangGraph and LangFlow

Despite the similar names, the two projects come from different organisations. On the technical side, both build on LangChain, a widely used library for AI and LLM-related tasks.

LangGraph

LangGraph is a framework for creating AI workflows using graph-like structures. Developers define nodes (tasks) and edges (data flow) to model complex, dynamic processes. It's all done in code (Python or JavaScript), which makes it powerful but requires coding knowledge.
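The node-and-edge idea can be sketched without the library. The following is plain Python and deliberately not the LangGraph API: nodes are functions that update a shared state, and edges are functions that name the next node based on that state. All names are made up for illustration.

```python
from typing import Callable

State = dict
END = "__end__"


def draft(state: State) -> State:
    # Each node returns an updated copy of the state.
    return {
        **state,
        "text": state.get("text", "") + "x",
        "attempts": state.get("attempts", 0) + 1,
    }


def review(state: State) -> State:
    return {**state, "approved": len(state["text"]) >= 3}


NODES: dict[str, Callable[[State], State]] = {"draft": draft, "review": review}

# Edges: given the state after a node ran, name the node to run next.
EDGES: dict[str, Callable[[State], str]] = {
    "draft": lambda state: "review",
    "review": lambda state: END if state["approved"] else "draft",  # loop back
}


def run_graph(start: str, state: State, max_steps: int = 20) -> State:
    current = start
    for _ in range(max_steps):
        if current == END:
            return state
        state = NODES[current](state)
        current = EDGES[current](state)
    raise RuntimeError("step limit reached without hitting END")


result = run_graph("draft", {})
```

The conditional edge out of `review` is what makes this a graph rather than a straight pipeline: the flow can return to an earlier node until a condition is met.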

You can visualize the code and there are some UI tools to help follow the workflow, but it’s not designed for building workflows via UI. If you choose LangGraph, you're writing the code directly. It includes helpers to use agents with tools, making it ideal for agent-based setups as well.

LangFlow

LangFlow is another framework for building AI workflows and agents, with a focus on modularity and ease of use. Workflows are configured via a web-based UI, which is quite powerful. For example, you can change component code directly in the UI – and it works well.

The downside is that you're locked into editing code through the UI, without the comforts of your usual development environment: no AI companions, autocomplete, or version control.

While it's easy to get an agent or workflow running via drag and drop, I often ended up editing component code. Fortunately, components are small and easy to adjust. LangFlow is definitely a "low-code" platform, not a "no-code" one.

In terms of flow logic, some features are still missing. You can’t return to a previous step in a flow – it always goes forward. But you can loop through lists of entries.

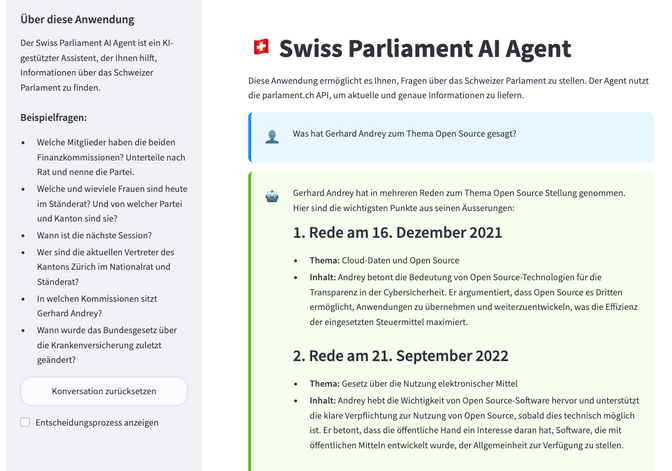

Building the Swiss Parliament Bot with LangGraph (and Streamlit)

A few weeks ago, Gerhard and I had another AI hack morning. While we didn’t make much progress that day, it sparked an old idea: a chatbot for parlament.ch – similar to ZüriCityGPT and Alva – could be useful.

Because the site is rendered client-side, crawling it for another LiipGPT-style implementation would have been almost impossible, or at least very time-consuming. On the way home, I thought of pulling data directly from the (unofficial, but public) Swiss Parliament API using workflows and agents instead.

The result was the Swiss Parliament Bot, which constructs and executes structured queries without using vector stores or crawling the site. I first tried the agent route with tools, but it didn’t work well. Then I switched to a traditional workflow with an evaluator node – skipping AI agents and tools.

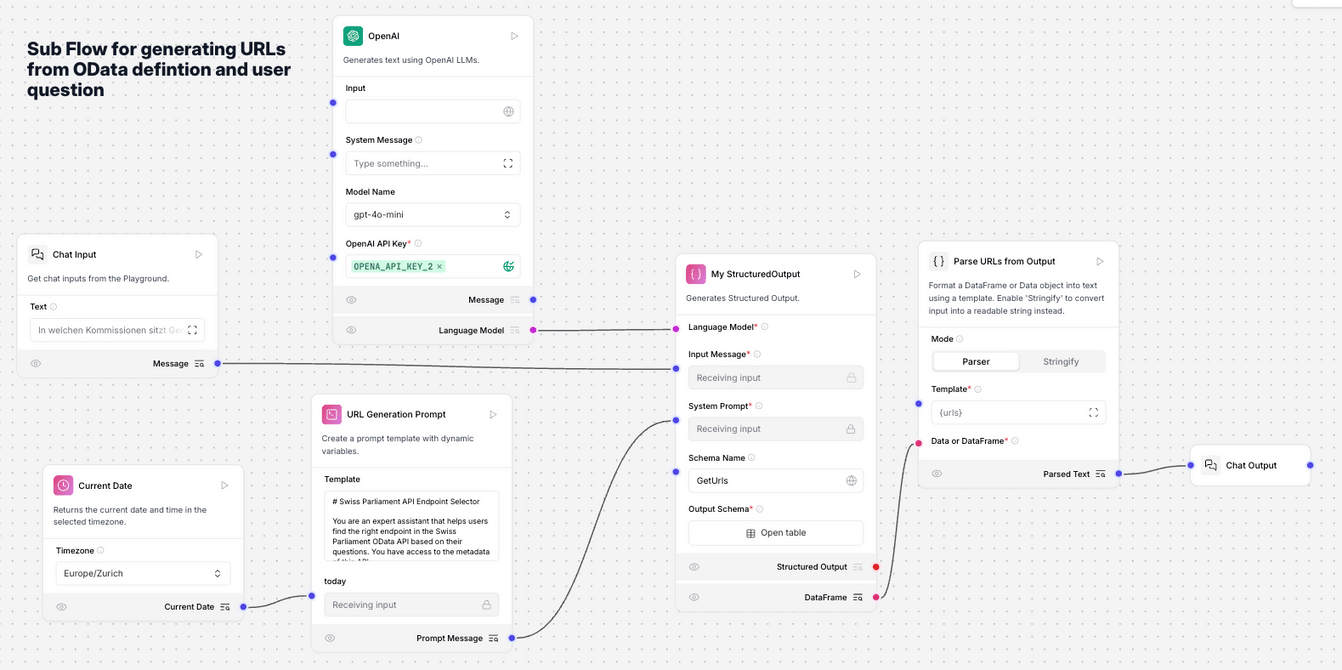

The core idea was to give the LLM a long prompt with all the OData definitions of the API, like:

## Available Entities and Parameters

The Swiss Parliament API has the following main entities with their key parameters:

### MemberCommittee

- ID, Language, CommitteeNumber, PersonNumber, PersonIdCode, CommitteeFunctionName

- FirstName, LastName, GenderAsString (f/m), PartyNumber, PartyName, CouncilName

- CantonName, ParlGroupNumber, ParlGroupName

[etc...]

Then I told the LLM to construct appropriate queries. Since OData is well documented, the LLM could generate valid URLs, with some extra hints.
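For readers who have not worked with OData, this is roughly the kind of URL the LLM has to produce. The sketch below builds one by hand using the standard `$filter`, `$select`, `$top` and `$format` query options. The host is a placeholder, and the filter values (`DE`, `Zürich`) are assumptions about the data. Only the entity and field names come from the prompt excerpt above.

```python
from urllib.parse import quote, urlencode

# Placeholder host, not the real service address.
BASE_URL = "https://example.org/odata.svc"


def odata_literal(value) -> str:
    """Format a Python value as an OData literal (ints bare, strings quoted)."""
    if isinstance(value, int):
        return str(value)
    # Single quotes inside a string literal are escaped by doubling them.
    return "'" + str(value).replace("'", "''") + "'"


def build_odata_url(entity, filters, select=None, top=20) -> str:
    clauses = [f"{field} eq {odata_literal(value)}" for field, value in filters.items()]
    params = {}
    if clauses:
        params["$filter"] = " and ".join(clauses)
    params["$top"] = str(top)
    params["$format"] = "json"
    if select:
        params["$select"] = ",".join(select)
    # Keep '$', ',' and quotes readable; spaces and umlauts get percent-encoded.
    query = urlencode(params, quote_via=quote, safe="$,'")
    return f"{BASE_URL}/{entity}?{query}"


url = build_odata_url(
    "MemberCommittee",
    {"Language": "DE", "CantonName": "Zürich"},
    select=["FirstName", "LastName", "PartyName"],
    top=5,
)
```

In the bot the LLM writes such URLs directly from the entity definitions. A helper like this is mainly useful for testing which queries the API accepts.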

Another LangGraph node called the URLs and inserted the results into a new prompt. This prompt evaluated whether there was enough data to answer the question. If not, the flow returned to the URL generation step to try again, avoiding duplicates. This retry loop addressed two issues: invalid results and insufficient data.
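The retry logic can be sketched in plain Python, independent of LangGraph. The three callables (`generate_urls`, `fetch`, `is_sufficient`) are hypothetical stand-ins for the LLM prompts and the HTTP call, and the `max_rounds` limit is my assumption. The text above does not say how the real bot bounds its retries.

```python
from typing import Callable


def collect_data(
    question: str,
    generate_urls: Callable[[str, set[str]], list[str]],
    fetch: Callable[[str], str],
    is_sufficient: Callable[[str, list[str]], bool],
    max_rounds: int = 3,
) -> list[str]:
    """Generate URLs, fetch them, and retry until the evaluator is satisfied."""
    tried: set[str] = set()
    results: list[str] = []
    for _ in range(max_rounds):
        # Pass the already-tried URLs so the generator can avoid repeating them,
        # and filter again in case it repeats them anyway.
        candidates = dict.fromkeys(generate_urls(question, tried))
        new_urls = [url for url in candidates if url not in tried]
        if not new_urls:
            break  # nothing new to try
        for url in new_urls:
            tried.add(url)
            try:
                results.append(fetch(url))
            except Exception:
                continue  # an invalid URL just costs one attempt
        if is_sufficient(question, results):
            break
    return results
```

Keeping the set of tried URLs outside the generator covers both failure modes with one mechanism: a bad URL is never requested twice, and a round that adds no new URLs ends the loop instead of spinning.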

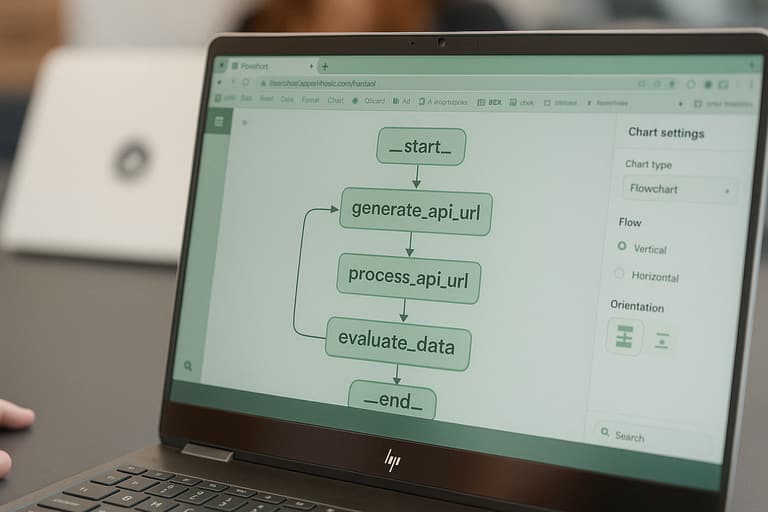

Once enough data was collected, the flow moved to the response node to write the answer. The whole flow looks more or less like the one in the title banner.

Limitations include the lack of semantic search (the API doesn’t support it), so exact terms are needed. It can also be slow, depending on how many URLs and API requests are needed.

LangGraph gave me the flexibility I needed. Since it's just Python code with library support, I could shape the flow exactly how I wanted, and build it quickly.

You can try the bot at swissparliamentbot.gpt.liip.ch. It’s still a prototype, so some queries may not work as expected.

Transcribing and Summarising Meetings Locally with LangFlow

I love coding, and with AI companions to get started, it’s no longer the time-consuming undertaking it once was. Still, I’m always curious about new tools, and that’s how I found LangFlow. I wanted to build a workflow to transcribe and summarise recordings using Transcribo, an open-source tool from Statistisches Amt Kanton Zürich. It runs locally, is based on Whisper, handles Swiss German well and adds diarization, but it lacked an API.

So I quickly wrote an API wrapper and reimplemented its text extraction in Python. That’s now merged into the main repo and Transcribo can be used in automated flows.

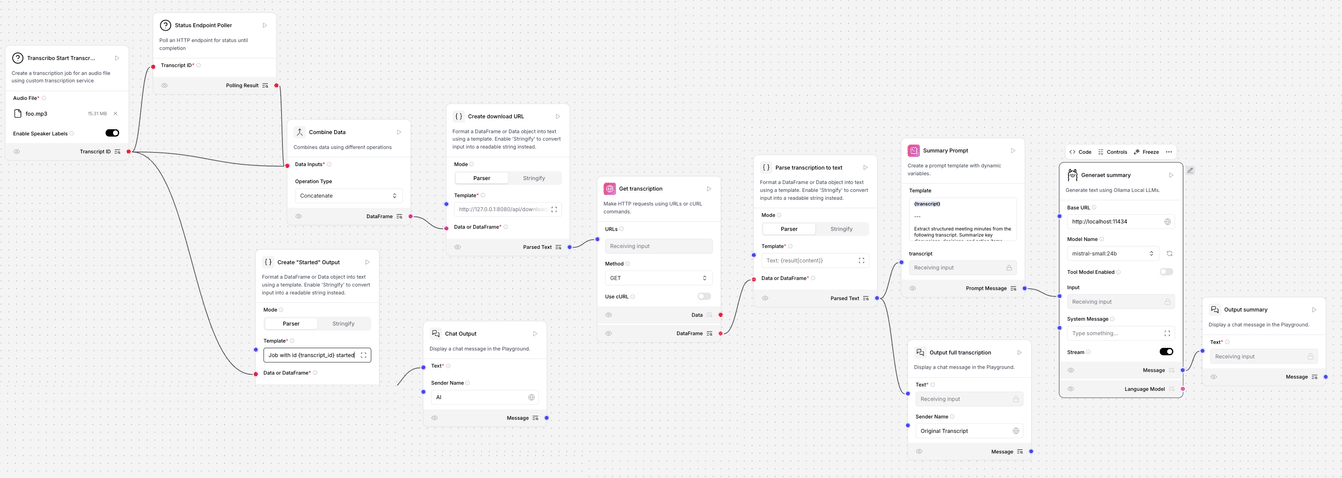

Then I built a LangFlow demo with these steps:

- Upload audio

- Transcribe via the Transcribo API

- Poll the API until transcription is done (adjusted an example component for this)

- Get the transcript

- Summarise using a local Ollama instance (with mistral-small as LLM)

A few clicks later, I had a transcript and summary – fully local and open source.
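The polling step from the list above could look roughly like this outside of LangFlow. It is a sketch under assumptions: `get_status` is a hypothetical callable that asks the transcription API for the job state, and the status strings `done` and `failed` are invented for the example.

```python
import time
from typing import Callable


def wait_for_transcript(
    get_status: Callable[[], str],
    interval_s: float = 5.0,
    timeout_s: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Block until get_status() reports 'done'; raise on failure or timeout."""
    waited = 0.0
    while True:
        status = get_status()
        if status == "done":
            return
        if status == "failed":
            raise RuntimeError("transcription failed")
        if waited >= timeout_s:
            raise TimeoutError(f"no result after {timeout_s:.0f} s")
        sleep(interval_s)
        waited += interval_s
```

Passing `sleep` in as a parameter keeps the function testable without real waiting. In production code the default `time.sleep` is used.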

It wasn’t the most advanced workflow, and I could have done it in plain Python or Node.js, but LangFlow made it fast and easy enough.

Adding a Svelte Frontend

LangFlow doesn’t support uploading arbitrary files via its chat interface – only images and text documents for now. To use different audio files, I had to upload them manually in the component workflow, which isn’t ideal for public use.

I explored the LangFlow API to support file uploads. (Hint: upload the file to the workflow and patch it via the tweaks property when calling the chat API.) It worked! Now I have a Svelte-based UI that uploads a file, runs the workflow, and returns a summary. The workflow itself can be edited later in the LangFlow Admin UI if it needs further adjustments.
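As a rough illustration of the hint, the sketch below only builds the request for running a flow with one component field overridden through `tweaks`. It does not send anything. The endpoint path, the component ID and the `path` field name are assumptions on my side and should be checked against the LangFlow version in use. The upload itself is left out.

```python
def build_run_request(
    base_url: str,
    flow_id: str,
    component_id: str,
    uploaded_path: str,
    message: str = "",
) -> tuple[str, dict]:
    """Return the URL and JSON body for running a flow with a patched file path."""
    # Assumed run endpoint; verify against your LangFlow version.
    url = f"{base_url.rstrip('/')}/api/v1/run/{flow_id}"
    body = {
        "input_value": message,
        "input_type": "chat",
        "output_type": "chat",
        # Override one field of one component for this run only.
        "tweaks": {component_id: {"path": uploaded_path}},
    }
    return url, body


# Hypothetical IDs and path, for illustration only.
url, body = build_run_request(
    "http://localhost:7860/",
    flow_id="my-flow-id",
    component_id="File-abc12",
    uploaded_path="my-flow-id/meeting.mp3",
)
```

The useful property of `tweaks` is that the stored flow stays untouched: each request carries its own file path, so several users can run the same flow with different recordings.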

The chat API still has issues – streaming doesn’t always behave as expected. The maintainers are aware of this and are working on a better version.

LangFlow is clearly a low-code tool. I needed to adjust some component code, but with a bit of developer knowledge it’s totally manageable.

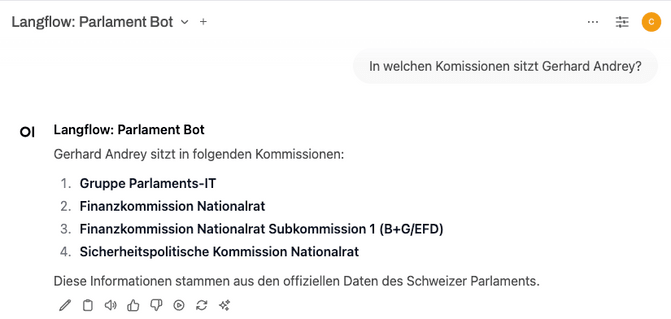

Recreating the Parliament Bot in LangFlow

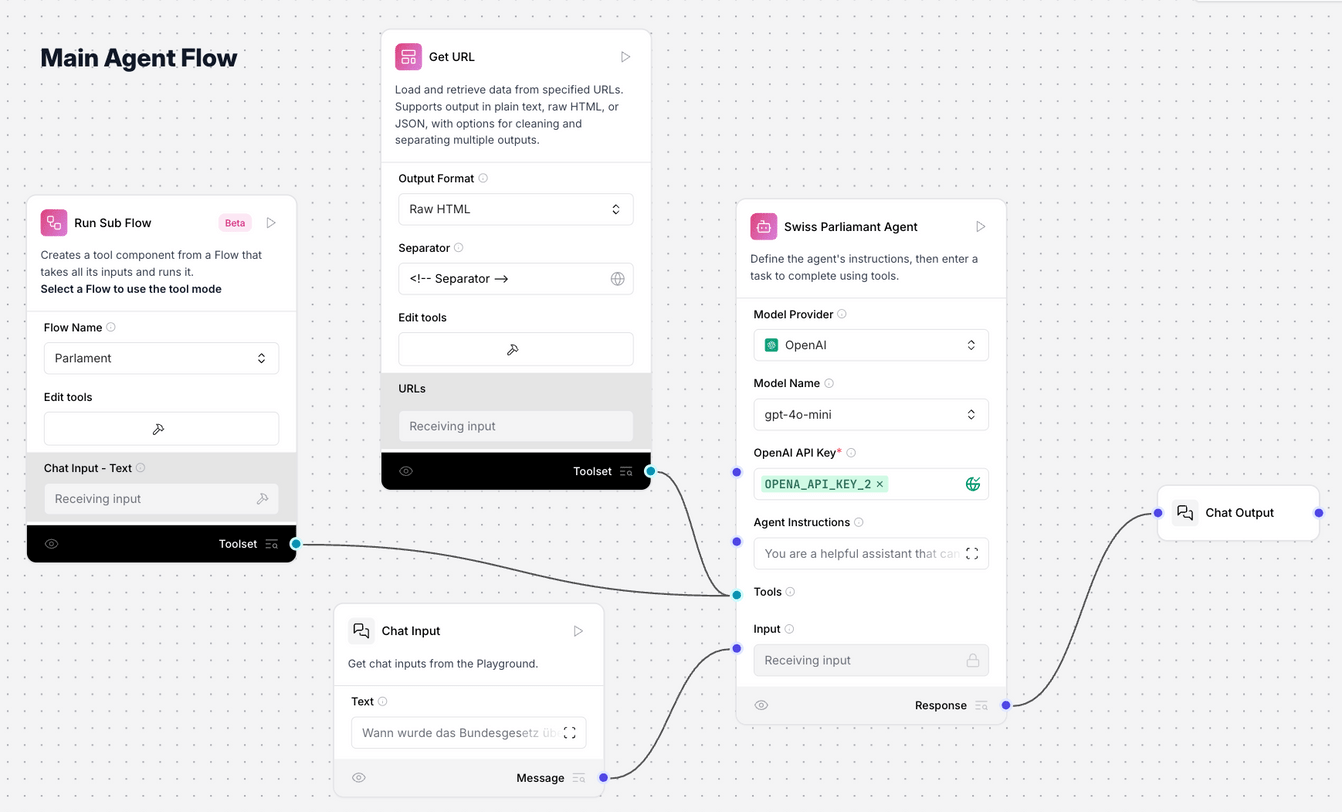

After building the simpler workflow, I tried to recreate the Parliament Bot in LangFlow. But I hit a limitation pretty soon: you can’t go back in a flow, which was essential to my LangGraph setup. I would have had to write one large component instead – not the point of LangFlow.

So I switched to the agent approach, which LangFlow supports well. I added a URL fetcher and created a URL generator tool, and linked them to an agent. The URL generator was its own sub-flow, connected as a tool to the main agent. It’s great that this is possible, but debugging was tricky. The whole thing worked in the end, but I didn’t spend time on all the optimisations I had in LangGraph. The proof of concept was done, and I had already learned a lot about LangFlow.

Integrating with Open WebUI

A third piece we’re exploring is Open WebUI – an open-source chat interface for LLMs. It supports tools, file uploads, and custom functions and is very customisable.

Open WebUI can be used to embed workflows from LangFlow or LangGraph behind a chat interface via its pipe and filter functions. Think of it as building your own ChatGPT, powered by custom workflows and agents. Since it supports tool calling and custom APIs, you could trigger a LangGraph or LangFlow workflow based on user input.
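A pipe function could look roughly like the sketch below. The `Pipe` class with a `pipe(body)` method follows my understanding of Open WebUI's convention for pipe functions, so treat the exact shape as an assumption. `run_workflow` is a hypothetical stand-in for the call into a LangGraph or LangFlow workflow.

```python
from typing import Callable


def run_workflow(question: str) -> str:
    """Hypothetical stand-in for a call to a LangGraph or LangFlow workflow."""
    return f"(workflow answer to: {question})"


class Pipe:
    """Forwards the latest user message of a chat to a custom workflow."""

    def __init__(self, workflow: Callable[[str], str] = run_workflow):
        self.workflow = workflow

    def pipe(self, body: dict) -> str:
        # Assumes the chat-completions shape: a list of role/content messages
        # with plain-text content.
        messages = body.get("messages", [])
        user_messages = [m["content"] for m in messages if m.get("role") == "user"]
        if not user_messages:
            return "No question found."
        return self.workflow(user_messages[-1])
```

Only the last user message is forwarded here. A workflow that needs conversation history would receive the whole `messages` list instead.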

It runs locally and supports multiple LLM backends – self-hosted or in the cloud, open source or commercial – making it a solid choice for internal AI tools without relying on proprietary providers.

Final Thoughts

These two examples showed where LangGraph and LangFlow shine. LangGraph is great when you need full control and want to build more complex flows (like the Swiss Parliament Bot). LangFlow is great for simpler flows (like transcribing and summarising) or agent-based setups without much coding.

The line between when to use each tool is blurry, and both tools are evolving quickly. It’s hard to keep up, but also very exciting.

Combining Open WebUI with LangGraph or LangFlow and a more complex RAG tool like LiipGPT might be the next step for many projects. It adds usability and integration power that can turn flows into usable tools. And since it’s all open source, it’s definitely worth exploring – especially for organisations looking into these technologies.