We have set up a Varnish cache in front of the application as a reverse proxy. Varnish answers every request that is already cached directly, without involving the application. This is a simple lookup in the highly performance-optimised Varnish system, so these requests are answered within a few milliseconds.

Metrics

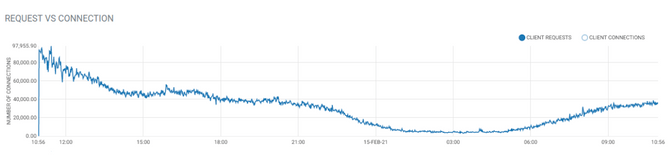

According to the Varnish monitoring, request frequency over 24 hours looks like this:

This screenshot was taken on a typical day, with nothing unusual going on. During the day, Varnish is serving 40'000 to 100'000 requests per minute, with peaks going even higher. At night, activity drops to much lower rates.

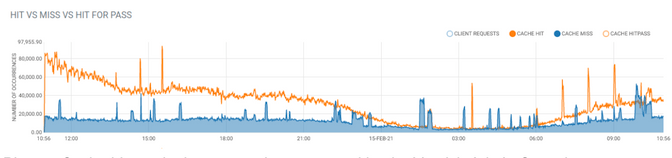

About ⅔ of all requests are cache hits in Varnish. The hit ratio is particularly high during high load. This takes away a lot of load from the backend application.

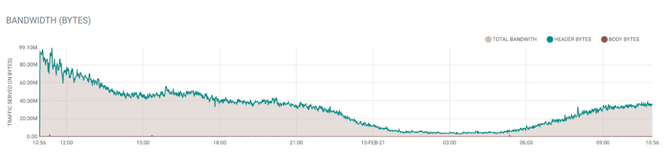

This activity also leads to considerable bandwidth consumption:

Getting the Most out of Caching

All requests to M-API need to be authenticated. To achieve a high cache hit rate, we use the "user context" pattern to cache by groups of API consumers with the same permissions rather than per authenticated user. Read more about this pattern in "Going Crazy with Caching - HTTP Caching and Logged in Users".
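To illustrate the idea behind the user context pattern, here is a minimal Python sketch. It is not the M-API implementation: the permission names and the URL are hypothetical, and hashing a sorted permission list is just one assumed way to derive a shared context.

```python
import hashlib


def context_hash(permissions):
    """Return a stable hash for a set of permissions, independent of their order."""
    canonical = ",".join(sorted(set(permissions)))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def cache_key(url, permissions):
    """Build the cache key from the URL and the permission group, not the user."""
    return f"{url}|{context_hash(permissions)}"


# Hypothetical consumers: A and B have the same permissions, C has fewer.
key_a = cache_key("/products/123", ["read:products", "read:prices"])
key_b = cache_key("/products/123", ["read:prices", "read:products"])
key_c = cache_key("/products/123", ["read:products"])
```

Consumers A and B end up with the same cache key and share one cached response, while consumer C gets a separate entry.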

We also configure Varnish to clean up the query parameters and Accept-Language header to increase the cache hit rate further.
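The effect of such normalisation can be sketched in Python. This is only an illustration of the principle, not our Varnish configuration: the list of allowed parameters, the supported languages, and the default language are assumptions, and the language matching ignores q-values for brevity.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

ALLOWED_PARAMS = {"q", "page", "limit"}          # hypothetical whitelist
SUPPORTED_LANGUAGES = ("de", "fr", "it", "en")   # hypothetical
DEFAULT_LANGUAGE = "de"                          # hypothetical


def normalise_url(url):
    """Drop unknown query parameters and sort the rest, so equivalent URLs match."""
    parts = urlsplit(url)
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key in ALLOWED_PARAMS
    ]
    params.sort()
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(params), ""))


def normalise_accept_language(header):
    """Reduce an Accept-Language header to the first supported two-letter language."""
    for item in header.split(","):
        language = item.split(";")[0].strip().lower()[:2]
        if language in SUPPORTED_LANGUAGES:
            return language
    return DEFAULT_LANGUAGE
```

With this sketch, `/products?page=2&utm_source=x&q=milk` and `/products?q=milk&page=2` map to the same URL, and the many possible Accept-Language header values collapse to a handful of variants.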

Because the application is aware when data is changed, we configure a long cache lifetime and let the application actively invalidate the cache as needed. We use the xkey mechanism of Varnish to tag all responses and invalidate them by tag.
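Conceptually, tag-based invalidation works like the following in-memory Python sketch. It only models the idea of tagging and purging by tag. It does not use the Varnish xkey API, and the class, the URLs, and the tag names are made up for illustration.

```python
from collections import defaultdict


class TaggedCache:
    """Toy cache where every entry carries tags and can be purged by tag."""

    def __init__(self):
        self._entries = {}                     # url -> response body
        self._urls_by_tag = defaultdict(set)   # tag -> urls carrying that tag

    def store(self, url, body, tags):
        self._entries[url] = body
        for tag in tags:
            self._urls_by_tag[tag].add(url)

    def get(self, url):
        return self._entries.get(url)

    def invalidate_tag(self, tag):
        """Remove every entry tagged with `tag` and return how many were removed."""
        urls = self._urls_by_tag.pop(tag, set())
        return sum(1 for url in urls if self._entries.pop(url, None) is not None)


cache = TaggedCache()
cache.store("/products/123", "product 123", tags=["product-123"])
cache.store("/products/123/related", "related", tags=["product-123", "product-456"])
cache.store("/products/456", "product 456", tags=["product-456"])

# Product 123 changed: one call removes every response that depends on it.
removed = cache.invalidate_tag("product-123")
```

After the call, both responses tagged `product-123` are gone, while `/products/456` stays cached.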

Many of the search results are lists of products, such as those from free text searches or category browsing. When data changes, the ordering of search results may also change. As we use paging, xkey alone is not sufficient. A change affects not only the page that previously included the product but also all subsequent pages. We would have to invalidate all lists whenever any product changes, which would render the cache pointless.

Instead, we use edge side includes (ESI). The list responses only include ESI instructions to reference the actual products to be included in the list, but not the data. This makes list generation very efficient, allowing us to use a short cache lifetime for them. Varnish interprets the ESI to fetch the individual products and assemble the result for the client. The product subrequests are cached for a long time and tagged with the product ID, and thus can be invalidated. This ensures that even if the list response is cached, the items in the list are updated immediately.
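The following Python sketch mimics what happens with ESI. It is a simplification under stated assumptions: the `/products/{id}` URL scheme and the function names are hypothetical, and a regular expression stands in for the ESI processing that Varnish performs.

```python
import re

# Matches self-closing ESI include tags such as <esi:include src="/products/1"/>
ESI_INCLUDE = re.compile(r'<esi:include src="([^"]+)"\s*/>')


def render_list(product_ids):
    """Backend side: emit only ESI references, not the product data itself."""
    includes = ",".join(f'<esi:include src="/products/{pid}"/>' for pid in product_ids)
    return f"[{includes}]"


def assemble(body, fetch_fragment):
    """Cache side: replace every ESI include with the fragment for its URL."""
    return ESI_INCLUDE.sub(lambda match: fetch_fragment(match.group(1)), body)


# Stand-in for the individually cached product responses.
fragments = {
    "/products/1": '{"id": 1, "name": "Milk"}',
    "/products/2": '{"id": 2, "name": "Bread"}',
}

cached_list = render_list([1, 2])
before = assemble(cached_list, fragments.__getitem__)

# Product 1 changes and its fragment is refreshed; the cached list stays untouched.
fragments["/products/1"] = '{"id": 1, "name": "Organic Milk"}'
after = assemble(cached_list, fragments.__getitem__)
```

The cached list body is identical in both calls, yet the second assembled response already contains the updated product.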

We also configured the "grace" behaviour, where Varnish is allowed to serve stale content if the backend application is not responding. In most M-API use cases, slightly outdated information is better than no response.
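As a rough model of this behaviour, consider the Python sketch below. It covers only the case described here, serving a stale entry when the backend fails. The class name, the `ttl` and `grace` parameters, and the injected clock are illustrative assumptions, not Varnish settings.

```python
import time


class GraceCache:
    """Toy cache that serves expired entries for a grace period if the backend fails."""

    def __init__(self, ttl, grace, clock=time.monotonic):
        self._ttl = ttl
        self._grace = grace
        self._clock = clock
        self._entries = {}   # key -> (stored_at, body)

    def fetch(self, key, load_from_backend):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]                      # fresh hit
        try:
            body = load_from_backend(key)
        except Exception:
            # Backend is down: fall back to the stale copy while within grace.
            if entry is not None and now - entry[0] < self._ttl + self._grace:
                return entry[1]
            raise
        self._entries[key] = (now, body)
        return body
```

A caller passes a function that loads the response from the backend. If that function raises an exception and a copy within the grace period exists, the stale copy is returned instead of an error.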

Server Application

Not everything can be handled by HTTP caching. For requests that cannot be cached, or when a response has not been cached yet, the backend response time is crucial. Modern PHP, and particularly the Symfony framework we used, is optimised for quick response times.

PHP is by design well suited for stateless applications. In M-API, each request is fully independent, allowing easy horizontal scaling. Our cloud setup monitors application load, automatically spinning up more instances when demand is high and shutting down instances when load is low.

To optimise response times, we used a profiler to identify the bottlenecks and verify that the changes actually led to improvements. We identified data that we could store in an application cache (Redis) to reduce queries to MySQL. After that, a significant part of the processing time was spent converting objects to JSON. We explored different options to speed up this process, and ultimately built a solution that was orders of magnitude faster. Read more about that in this blog post.
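The application cache follows the familiar cache-aside idea, sketched here in Python. This is an assumption-laden illustration: a small in-memory class stands in for Redis, and the "category" data and function names are hypothetical, since which data was actually cached is not specified here.

```python
import json


class ApplicationCache:
    """In-memory stand-in for a Redis-like store with string get/set."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        self._data[key] = value


def load_category(category_id, cache, query_database):
    """Return the category from the cache, querying the database only on a miss."""
    key = f"category:{category_id}"
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)
    row = query_database(category_id)
    cache.set(key, json.dumps(row))
    return row
```

Only the first call for a given ID reaches the database. Later calls are answered from the cache until the entry is removed.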

With these measures, we achieved response times of around 50 milliseconds for product responses. A single product response can contain from several hundred KB to over 1 MB of JSON data.