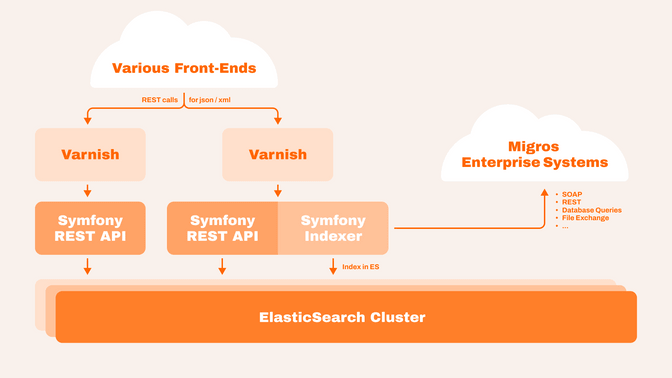

Migros has various websites and a mobile application that all need to display product data. We built the M-API, a Symfony application that gathers the data from the various systems, cleans it up and indexes it in Elasticsearch. Clients can query this index in real time through a REST API that returns JSON or XML. The M-API handles several million requests per day and performs well even during traffic peaks.

API Architecture

The documents in Elasticsearch are large and contain a lot of nested data. We decided to de-normalise all product-related data into each product record to make lookups faster. When any part of the product information changes, we rebuild that product's document and re-index it. Query parameters let the client control how much detail the API returns.
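De-normalising means that a change to shared data, such as a renamed category, touches many product documents at once. The Python sketch below shows one way to find the products that need re-indexing: keep a reverse mapping from each embedded category to the products whose documents contain it. It is an illustration with hypothetical names and data, not code from the M-API.

```python
"""Sketch: finding the de-normalised product documents to re-index.

Each product document embeds copies of shared data (here: its category
breadcrumb), so a change to one category affects every product below it.
All names and data are hypothetical.
"""
from collections import defaultdict


def build_reverse_index(products: dict[str, list[str]]) -> dict[str, set[str]]:
    """Map each category ID to the IDs of products whose document embeds it.

    `products` maps product ID -> category IDs in its breadcrumb.
    """
    embedded_in: dict[str, set[str]] = defaultdict(set)
    for product_id, breadcrumb in products.items():
        for category_id in breadcrumb:
            embedded_in[category_id].add(product_id)
    return embedded_in


def products_to_reindex(changed_category: str,
                        embedded_in: dict[str, set[str]]) -> set[str]:
    """Return every product whose document contains the changed category."""
    return set(embedded_in.get(changed_category, set()))


products = {
    "p1": ["food", "dairy", "cheese"],
    "p2": ["food", "dairy", "milk"],
    "p3": ["household", "cleaning"],
}
embedded_in = build_reverse_index(products)
print(sorted(products_to_reindex("dairy", embedded_in)))  # ['p1', 'p2']
```

Only the affected documents are rebuilt, which keeps the cost of de-normalisation proportional to the size of the change.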

The Symfony application is built around domain model classes for the data. When indexing, the models are populated with data from the external systems and serialised into Elasticsearch. When querying, the models are restored from the Elasticsearch responses and then re-serialised in the requested format and with the requested level of detail. We use the JMS serializer because it can group fields to control the level of detail, and it even lets us support multiple versions of the API from the same data source. We wrote a custom serializer for JMS to improve performance.
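JMS Serializer expresses this with metadata such as `@Groups`, `@Since` and `@Until`, combined with the groups and version set on the serialisation context. The following Python sketch mimics that idea without JMS: each field declares the detail groups it belongs to and the versions in which it exists, and a field that old clients expect is derived on the fly from the newer model. Field names, groups and version numbers are invented for illustration, and the exclusive `removed_in` bound is a simplification of this sketch, not JMS behaviour.

```python
"""Sketch: selecting fields by detail group and API version.

Mimics the idea behind JMS Serializer's Groups/Since/Until metadata in
plain Python. Field names, groups and versions are hypothetical.
"""
from dataclasses import dataclass
from typing import Any, Callable, Optional

Version = tuple[int, ...]


@dataclass(frozen=True)
class FieldMeta:
    name: str
    groups: frozenset[str]                    # detail levels that include the field
    extract: Callable[[dict[str, Any]], Any]  # reads the value from the model
    since: Optional[Version] = None           # first version with the field
    removed_in: Optional[Version] = None      # first version without it


PRODUCT_FIELDS = [
    FieldMeta("id", frozenset({"list", "detail"}), lambda p: p["id"]),
    FieldMeta("name", frozenset({"list", "detail"}), lambda p: p["name"]),
    # Old clients expect a flat price: derived on the fly, not stored twice.
    FieldMeta("price", frozenset({"list", "detail"}),
              lambda p: p["pricing"]["amount"], removed_in=(2, 0)),
    FieldMeta("pricing", frozenset({"list", "detail"}),
              lambda p: p["pricing"], since=(2, 0)),
    FieldMeta("nutrients", frozenset({"detail"}), lambda p: p["nutrients"]),
]


def parse_version(version: str) -> Version:
    return tuple(int(part) for part in version.split("."))


def serialize(product: dict[str, Any], groups: set[str], version: str) -> dict[str, Any]:
    """Keep only fields in a requested group that exist in `version`."""
    v = parse_version(version)
    out: dict[str, Any] = {}
    for field in PRODUCT_FIELDS:
        if not field.groups & groups:
            continue
        if field.since is not None and v < field.since:
            continue
        if field.removed_in is not None and v >= field.removed_in:
            continue
        out[field.name] = field.extract(product)
    return out


product = {
    "id": "100",
    "name": "Milk 1l",
    "pricing": {"amount": 1.6, "currency": "CHF"},
    "nutrients": {"fat_g": 3.5},
}
print(serialize(product, {"list"}, "1.0"))
# {'id': '100', 'name': 'Milk 1l', 'price': 1.6}
print(serialize(product, {"detail"}, "2.0"))
# {'id': '100', 'name': 'Milk 1l', 'pricing': {'amount': 1.6, 'currency': 'CHF'}, 'nutrients': {'fat_g': 3.5}}
```

The stored model has a single shape; both the detail level and the version only affect what is written out.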

There is, of course, some overhead in deserialising the Elasticsearch data and re-serialising it, but the flexibility we gain is worth the price.

In front of the M-API, we run Varnish servers to cache results. We use the FOSHttpCacheBundle to manage caching headers and invalidate cached data as needed.
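FOSHttpCacheBundle supports, among other things, tagging cached responses and invalidating them by tag; the article does not say which invalidation mechanism the M-API relies on. Assuming a tag-based approach, the Python sketch below models the idea in memory: each response is stored with the tags of the products it contains, so updating one product purges exactly the responses that include it. The class and tag names are hypothetical, and this is not the bundle's API.

```python
"""Sketch: tag-based invalidation of cached API responses.

An in-memory stand-in for a caching proxy such as Varnish. Names are
hypothetical; this is not the FOSHttpCacheBundle API.
"""
from collections import defaultdict
from typing import Optional


class TaggedCache:
    def __init__(self) -> None:
        self._responses: dict[str, str] = {}
        self._urls_by_tag: defaultdict[str, set[str]] = defaultdict(set)

    def store(self, url: str, body: str, tags: set[str]) -> None:
        """Cache a response together with the tags of the products it contains."""
        self._responses[url] = body
        for tag in tags:
            self._urls_by_tag[tag].add(url)

    def get(self, url: str) -> Optional[str]:
        return self._responses.get(url)

    def invalidate_tag(self, tag: str) -> set[str]:
        """Purge every response carrying `tag` and return the purged URLs.

        URLs may linger in other tags' sets; purging them again is harmless.
        """
        purged = self._urls_by_tag.pop(tag, set())
        for url in purged:
            self._responses.pop(url, None)
        return purged


cache = TaggedCache()
cache.store("/products/100", '{"id": "100"}', {"product-100"})
cache.store("/products/200", '{"id": "200"}', {"product-200"})
cache.store("/categories/dairy", '["100", "200"]', {"product-100", "product-200"})

# Product 100 changed: its own page and the category listing are purged.
print(sorted(cache.invalidate_tag("product-100")))
# ['/categories/dairy', '/products/100']
print(cache.get("/products/200"))  # {"id": "200"}
```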

A separate API

The M-API is a separate Symfony application and does not render any HTML. It is only concerned with collecting, normalising and indexing data, and delivering it through a REST API. This separation lets many channels, such as websites, apps and other systems, share the same product data.

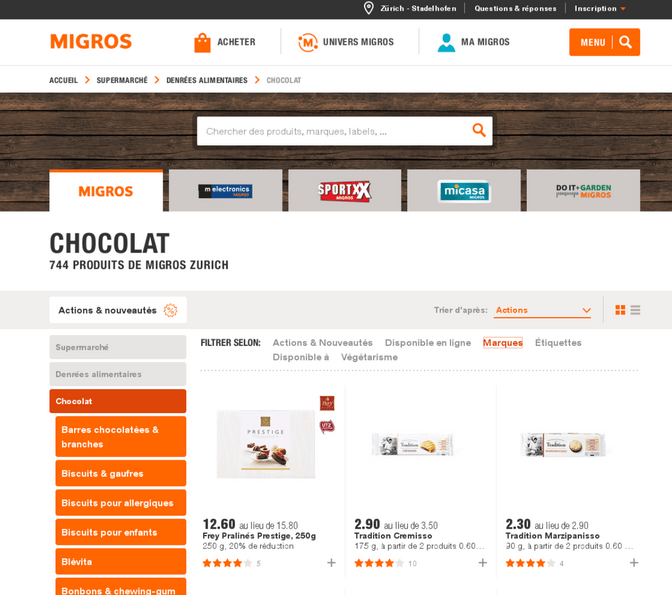

Systems built on the M-API include a product catalogue website, a mobile application, a customer community platform and various marketing sites about specific subsets of the products. Each of these can be developed independently, possibly with technologies other than PHP. While the product catalogue was implemented as a Symfony application, the mobile app is a native iOS and Android app, and many of the marketing sites are built with the Java-based CMS Magnolia.

Thanks to the M-API, these applications can focus on their own use cases and find the data readily available, instead of each having to re-implement imports from the various sources. This saves time and effort while also improving data quality, since improvements can be made centrally in the M-API.

The data gathering is rather complex. The M-API collects:

- Core product data from the central product catalogue and pricing systems

- Images, prepared for the web via the rokka.io CDN

- Metadata such as chemical warnings or product recommendations from data warehouse analyses

- Inventory information from the warehouse management system and a store search

- Popularity data based on customer feedback and Google Analytics

The development process

Together with the client, we chose an agile process. The minimum viable product simply provided the product data in JSON and XML format. After a few months, the M-API had enough functionality to go live. Over the past 10 years, we have continually added new data sources and API endpoints, releasing in two-week sprints.

To provide a stable API, we use API versioning so that consumers can upgrade on their own schedule. Every request must carry a version header; this avoids having to synchronise deployments with many clients. Thanks to the serialiser, we can handle the different versions without duplicating data, adapting it to older formats on the fly. We also maintain a changelog to help client developers upgrade.
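The article does not name the version header or list the supported versions, so `X-Api-Version` and the version set below are assumptions. This Python sketch shows the check every request would pass through: look up the header case-insensitively and reject requests without a known version.

```python
"""Sketch: rejecting requests without a supported API version header.

The header name and the supported versions are hypothetical; the article
only says that every request must carry a version header.
"""
from typing import Mapping

VERSION_HEADER = "X-Api-Version"            # hypothetical header name
SUPPORTED_VERSIONS = {"1.0", "1.1", "2.0"}  # hypothetical versions


class VersionError(ValueError):
    """Raised when a request has no usable API version (e.g. answer with HTTP 400)."""


def requested_version(headers: Mapping[str, str]) -> str:
    # HTTP header names are case-insensitive, so normalise before the lookup.
    normalised = {name.lower(): value.strip() for name, value in headers.items()}
    version = normalised.get(VERSION_HEADER.lower())
    if not version:
        raise VersionError(f"missing {VERSION_HEADER} header")
    if version not in SUPPORTED_VERSIONS:
        raise VersionError(f"unsupported API version {version!r}")
    return version


print(requested_version({"x-api-version": "2.0"}))  # 2.0
try:
    requested_version({})
except VersionError as err:
    print(err)  # missing X-Api-Version header
```

Failing loudly instead of falling back to the latest version is one way to make sure no client is moved to a new response format without opting in.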

To run the same codebase on the integration system as on production without prematurely exposing features in production, we use feature flags.
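A minimal sketch of environment-specific flags, assuming a simple per-environment table; in a Symfony application this would more likely live in configuration than in code. Flag names and endpoints are hypothetical. Unknown flags and environments default to off, so a feature only appears where it has been switched on explicitly.

```python
"""Sketch: environment-specific feature flags.

The same code runs on integration and production; a per-environment
table decides which features are active. All names are hypothetical.
"""
FEATURE_FLAGS = {
    "integration": {"store_stock_endpoint": True, "recommendations_v2": True},
    "production": {"store_stock_endpoint": True, "recommendations_v2": False},
}


def is_enabled(feature: str, environment: str) -> bool:
    """Unknown features or environments default to off, so nothing leaks."""
    return FEATURE_FLAGS.get(environment, {}).get(feature, False)


def product_links(product_id: str, environment: str) -> list[str]:
    """Links advertised for a product; the new endpoint is hidden behind a flag."""
    links = [f"/products/{product_id}"]
    if is_enabled("recommendations_v2", environment):
        links.append(f"/products/{product_id}/recommendations")
    return links


print(product_links("100", "integration"))
# ['/products/100', '/products/100/recommendations']
print(product_links("100", "production"))
# ['/products/100']
```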

Indexing Architecture

During indexing, we de-normalise a lot of data to make querying as fast as possible. For example, the whole breadcrumb of product categories is stored within the product, so the full information is available with a single Elasticsearch request. This requires a lot of data to be available during indexing. To make this feasible, we copy all data that is slow to query to the local system and index from there. In our case this is a MySQL database, but it is essentially a cache of serialised response data, so any persistent storage system would do. The indexing process also caches intermediate data in Redis for speed. These optimisations also let us split the process into two phases: data gathering, then indexing. Elasticsearch runs as a cluster with several nodes, mainly to cope with the high load of indexing.
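The breadcrumb example can be sketched as follows, in Python for illustration. `CATEGORY_PARENTS` stands in for category data already copied to the local store, and `functools.lru_cache` stands in for the Redis cache of intermediate results, so the path of a category shared by many products is computed only once. Names and data are hypothetical.

```python
"""Sketch: de-normalising the category breadcrumb into a product document.

CATEGORY_PARENTS stands in for category data in the local store (MySQL in
the article); lru_cache stands in for the Redis cache of intermediate
results. All names and data are hypothetical.
"""
from functools import lru_cache
from typing import Optional

# category ID -> (display name, parent ID or None for a root category)
CATEGORY_PARENTS: dict[str, tuple[str, Optional[str]]] = {
    "food": ("Food", None),
    "dairy": ("Dairy", "food"),
    "cheese": ("Cheese", "dairy"),
}


@lru_cache(maxsize=None)
def breadcrumb(category_id: str) -> tuple[str, ...]:
    """Names from the root down to `category_id`; shared paths are computed once."""
    name, parent = CATEGORY_PARENTS[category_id]
    return (breadcrumb(parent) if parent else ()) + (name,)


def build_document(product: dict) -> dict:
    """Return a self-contained document, ready to be sent to Elasticsearch."""
    return {**product, "breadcrumb": list(breadcrumb(product["category_id"]))}


doc = build_document({"id": "200", "name": "Gruyère", "category_id": "cheese"})
print(doc["breadcrumb"])  # ['Food', 'Dairy', 'Cheese']
```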

The main data import runs a series of Symfony commands that update the local MySQL database with the latest information. This is parallelised through workers communicating over RabbitMQ. Once the data-gathering message queue is empty, the second phase starts: indexing into Elasticsearch, again using workers to run the tasks in parallel.
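The two-phase flow can be sketched with Python's `queue.Queue` and threads standing in for RabbitMQ and separate worker processes. The point it illustrates is the ordering: indexing starts only after every data-gathering job has been acknowledged. Handlers, data and the worker count are hypothetical; a real setup would retry or dead-letter failed messages instead of just collecting them.

```python
"""Sketch: two-phase import with parallel workers.

queue.Queue and threads stand in for RabbitMQ and worker processes.
Handlers and data are hypothetical.
"""
import queue
import threading
from typing import Callable

local_store: dict[str, dict] = {}   # stand-in for the local MySQL cache
search_index: dict[str, dict] = {}  # stand-in for Elasticsearch
lock = threading.Lock()


def run_phase(jobs: list[str], handler: Callable[[str], None], workers: int = 4) -> list[str]:
    """Run all jobs on `workers` threads; return failed jobs once the queue is drained."""
    q: "queue.Queue[str]" = queue.Queue()
    for job in jobs:
        q.put(job)
    failed: list[str] = []

    def worker() -> None:
        while True:
            try:
                job = q.get_nowait()
            except queue.Empty:
                return  # nothing left to do
            try:
                handler(job)
            except Exception:
                with lock:
                    failed.append(job)  # a real system might retry or dead-letter
            finally:
                q.task_done()  # acknowledge, like an AMQP ack

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    q.join()  # blocks until every job has been acknowledged
    for t in threads:
        t.join()
    return failed


def gather(product_id: str) -> None:
    """Phase 1: fetch from the (slow) source systems and cache locally."""
    record = {"id": product_id, "name": f"Product {product_id}"}
    with lock:
        local_store[product_id] = record


def index(product_id: str) -> None:
    """Phase 2: build the search document from the local store only."""
    with lock:
        search_index[product_id] = dict(local_store[product_id])


ids = [str(n) for n in range(1, 11)]
gather_failures = run_phase(ids, gather)
index_failures = run_phase(ids, index)  # starts only after phase 1 is complete
print(len(search_index), gather_failures, index_failures)  # 10 [] []
```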

One challenge was that changing the structure of the Elasticsearch index requires rebuilding it. The index holds several hundred thousand documents, so the indexing process takes quite some time.

To optimise this:

- New code is deployed but not yet activated

- A new index is created by copying documents from the old index (faster than a complete re-import)

- Once completed, the new index is activated

This strategy saves time and system resources.
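One common way to implement the switch is an index alias: clients always query the alias, the new index is filled with Elasticsearch's Reindex API (`POST _reindex`), and the alias is then moved with a single Aliases API request (`POST _aliases`) containing a `remove` and an `add` action. The article does not say whether the M-API uses aliases, so treat this as an assumption. The Python sketch builds request bodies in the shape those APIs expect and applies them to an in-memory `FakeCluster`; index and alias names are hypothetical.

```python
"""Sketch: rebuild an index from the old one, then switch an alias.

FakeCluster is an in-memory stand-in for Elasticsearch. The request bodies
follow the shape of the Reindex API (POST _reindex) and the Aliases API
(POST _aliases). Index and alias names are hypothetical.
"""


def reindex_body(source: str, dest: str) -> dict:
    return {"source": {"index": source}, "dest": {"index": dest}}


def swap_alias_body(alias: str, old: str, new: str) -> dict:
    # Elasticsearch applies all actions of one _aliases request atomically.
    return {"actions": [
        {"remove": {"index": old, "alias": alias}},
        {"add": {"index": new, "alias": alias}},
    ]}


class FakeCluster:
    def __init__(self) -> None:
        self.indices: dict[str, dict[str, dict]] = {}
        self.aliases: dict[str, str] = {}  # alias -> single index (simplification)

    def reindex(self, body: dict) -> None:
        """Copy every document from the source index into the destination."""
        source = self.indices[body["source"]["index"]]
        target = self.indices.setdefault(body["dest"]["index"], {})
        for doc_id, doc in source.items():
            target[doc_id] = dict(doc)

    def update_aliases(self, body: dict) -> None:
        # Single-threaded here, so applying the actions in order is effectively atomic.
        for action in body["actions"]:
            kind, spec = next(iter(action.items()))
            if kind == "remove":
                self.aliases.pop(spec["alias"], None)
            elif kind == "add":
                self.aliases[spec["alias"]] = spec["index"]

    def get(self, alias: str, doc_id: str) -> dict:
        return self.indices[self.aliases[alias]][doc_id]


cluster = FakeCluster()
cluster.indices["products_v1"] = {"100": {"name": "Milk 1l"}}
cluster.aliases["products"] = "products_v1"  # clients only ever use the alias

# 1. New code is deployed; the alias still points at the old index.
# 2. Build the new index by copying from the old one instead of re-importing.
cluster.reindex(reindex_body("products_v1", "products_v2"))
# 3. Activate the new index by moving the alias in one request.
cluster.update_aliases(swap_alias_body("products", "products_v1", "products_v2"))
print(cluster.aliases["products"])  # products_v2
```

Keeping the old index until the new one has proved itself also makes a rollback a matter of moving the alias back.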